Vendors tend to lock down the types of transceivers you can use in their SFP/SFP+ and QSFP ports. They do this for a number of reasons, but mainly in the spirit of support and quality (which I can understand). Networking vendors have agreed on a set of guidelines that fall under the Multi-Source Agreement (MSA). MSA specifications dictate many physical characteristics, but not necessarily the electrical design. For that reason, a transceiver may work in one switch or module but not in another, because of design differences the MSA doesn't account for. Vendors will also say that many low-cost products don't properly code the MSA-required fields (type, distance and media type, among others), or that they misidentify the part, causing the switch to enable them with settings that aren't appropriate for the transceiver actually inserted.
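To make "coding the MSA fields" a bit more concrete: on SFP/SFP+ optics the identification data sits in a small EEPROM whose layout is defined by SFF-8472 (page A0h). QSFP modules use a different memory map and aren't covered here. Below is a minimal Python sketch, assuming the SFF-8472 offsets as I understand them (identifier at byte 0, 10G Ethernet compliance bits in byte 3, vendor name, part number and serial as space-padded ASCII at bytes 20-35, 40-55 and 68-83, and the CC_BASE checksum at byte 63). Check them against the spec revision your optic claims to follow. The function names and the EEPROM image are made up for illustration; it shows the kind of field a cheap optic might leave blank or get wrong.

```python
from dataclasses import dataclass
from typing import List

# Assumed SFF-8472 page A0h values; verify against the spec revision in use.
IDENTIFIERS = {0x03: "SFP/SFP+"}
TEN_G_CODES = {  # byte 3, upper nibble: 10G Ethernet compliance bits
    0x80: "10GBASE-ER",
    0x40: "10GBASE-LRM",
    0x20: "10GBASE-LR",
    0x10: "10GBASE-SR",
}


@dataclass
class SerialId:
    identifier: str
    ten_g: List[str]
    vendor_name: str
    vendor_pn: str
    vendor_sn: str
    checksum_ok: bool


def _ascii(eeprom: bytes, start: int, end: int) -> str:
    # Text fields are ASCII and padded with spaces.
    return eeprom[start:end].decode("ascii", errors="replace").strip()


def decode_a0(eeprom: bytes) -> SerialId:
    """Decode a few identification fields from an SFF-8472 A0h dump (hypothetical helper)."""
    if len(eeprom) < 96:
        raise ValueError("need at least the first 96 bytes of page A0h")
    # CC_BASE (byte 63) should equal the low 8 bits of the sum of bytes 0..62.
    checksum_ok = (sum(eeprom[0:63]) & 0xFF) == eeprom[63]
    return SerialId(
        identifier=IDENTIFIERS.get(eeprom[0], f"unknown (0x{eeprom[0]:02x})"),
        ten_g=[name for bit, name in TEN_G_CODES.items() if eeprom[3] & bit],
        vendor_name=_ascii(eeprom, 20, 36),
        vendor_pn=_ascii(eeprom, 40, 56),
        vendor_sn=_ascii(eeprom, 68, 84),
        checksum_ok=checksum_ok,
    )


# Usage with a made-up EEPROM image for an SFP+ that claims 10GBASE-SR.
img = bytearray(96)
img[0] = 0x03                      # identifier: SFP/SFP+
img[3] = 0x10                      # 10GBASE-SR compliance bit
img[20:36] = b"EXAMPLE VENDOR  "   # vendor name (16 bytes)
img[40:56] = b"EX-SFP-10G-SR   "   # vendor part number (16 bytes)
img[68:84] = b"SN0001          "   # vendor serial number (16 bytes)
img[63] = sum(img[0:63]) & 0xFF    # fill in CC_BASE last
print(decode_a0(bytes(img)))
```

A switch reading a module whose compliance bits or checksum are wrong has little to go on, which is roughly the failure mode vendors point to.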

TL;DR: use unsupported transceivers at your own risk; if one is found to cause an issue, it won’t be supported.

I’ve now got some 10gig links running in my home lab between my Aruba lab gear and a Ubiquiti UDM-PRO. Whilst it was pretty much plug and play on the Ubiquiti side (I’m using their transceivers), on the Aruba side I had to fiddle in the console to get it working. If you’re not managing the switch via Aruba Central, it’s a single, straightforward command:

```
Aruba-2930F# allow-unsupported-transceiver
Warning: The use of unsupported transceivers, DACs, and AOCs is at your own risk and may void support and warranty. Please see HPE Warranty terms and conditions.
Do you agree and do you want to continue (y/n)?
```

Hit Y to confirm.

If you’re using Aruba Central, you’ll also need to enable support mode first, then disable it again once the change is saved:

```
Aruba-2930F# aruba-central support-mode enable
Aruba-2930F# allow-unsupported-transceiver
Aruba-2930F# write memory
Aruba-2930F# aruba-central support-mode disable
```
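If you're doing this on more than one switch, the ordering above is worth wrapping so support mode always gets switched off again. Here's a minimal Python sketch of that idea. The `send` callable is hypothetical (anything that writes one line to the switch console: an SSH session, a serial wrapper, or a test stub), and the function name is mine. It also assumes the same (y/n) warranty prompt appears in the Central sequence and that running `write memory` on the non-Central path before rebooting does no harm; neither is shown explicitly above.

```python
from typing import Callable


def allow_unsupported_optics(send: Callable[[str], None], central_managed: bool) -> None:
    """Replay the CLI steps from this post through a caller-supplied `send` (hypothetical)."""
    if central_managed:
        # Central-managed switches need support mode before the local change.
        send("aruba-central support-mode enable")
    try:
        send("allow-unsupported-transceiver")
        send("y")  # assumed answer to the (y/n) warranty prompt
        send("write memory")  # save before the reboot (assumed harmless without Central)
    finally:
        if central_managed:
            # Hand control back to Central even if a step above raised.
            send("aruba-central support-mode disable")


# Usage with a stub that just prints each line instead of talking to a switch.
allow_unsupported_optics(print, central_managed=True)
```

The `try`/`finally` is the main point: leaving a switch stuck in support mode after a failed session is easy to miss.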

Once done, reboot your switch and the transceiver will come online and begin to operate (unless it’s too cheap and faulty…). You can verify it’s there with the following command:

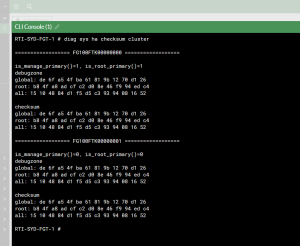

```
Aruba-2930F# show tech transceivers

 Cmd Info : show tech transceivers

 transceivers

 Transceiver Technical Information:

 Port # | Type      | Prod #     | Serial #         | Part #
 -------+-----------+------------+------------------+----------
 10    *| SFP+SR    | ??         | unsupported      |
```

In my case, the switch detects the transceiver (port 10, type SFP+SR), but shows no product, serial or part information.
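If you want to spot these ports across several switches, the table is easy to scrape. Below is a minimal Python sketch that parses `show tech transceivers` output shaped like the sample above and flags rows that look unsupported. The function and field names are hypothetical, and the "unsupported" heuristic (a `*` after the port, `??` for the product number, or the word `unsupported` in the serial column) is based only on this one sample, not on any documented format.

```python
from typing import List, NamedTuple


class TransceiverRow(NamedTuple):
    port: str
    flagged: bool  # a '*' after the port number
    xcvr_type: str
    product: str
    serial: str
    part: str


def parse_show_tech_transceivers(output: str) -> List[TransceiverRow]:
    rows = []
    for line in output.splitlines():
        cells = [c.strip() for c in line.split("|")]
        if len(cells) < 5:
            continue  # banner text, blank lines, or the "----+----" rule
        port_cell = cells[0]
        if not port_cell or port_cell.lower().startswith("port"):
            continue  # column header row
        rows.append(TransceiverRow(
            port=port_cell.rstrip("*").strip(),
            flagged=port_cell.endswith("*"),
            xcvr_type=cells[1],
            product=cells[2],
            serial=cells[3],
            part=cells[4],
        ))
    return rows


def looks_unsupported(row: TransceiverRow) -> bool:
    # Heuristic from the sample output only: starred port, '??' product, or 'unsupported'.
    return row.flagged or row.product in ("", "??") or row.serial.lower() == "unsupported"


SAMPLE = """\
 Transceiver Technical Information:

 Port # | Type      | Prod #     | Serial #         | Part #
 -------+-----------+------------+------------------+----------
 10    *| SFP+SR    | ??         | unsupported      |
"""

for row in parse_show_tech_transceivers(SAMPLE):
    print(row.port, row.xcvr_type, "unsupported?", looks_unsupported(row))
    # prints: 10 SFP+SR unsupported? True
```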